Artificial Intelligence (AI) has made significant strides over the past few decades, transforming industries and revolutionizing how we interact with technology. One of the key advancements in AI is the development of Cascade and Parallel Convolutional Recurrent Neural Networks (CP-C-RNNs). These models combine the strengths of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) to process sequential data more efficiently.

In this article, we’ll cover the fundamentals of CNNs and RNNs, examine the cascade and parallel architecture approaches, and highlight how Cascade and Parallel Convolutional Recurrent Neural Networks are shaping the future of AI.

Overview of AI and Neural Networks

AI has evolved from simple rule-based systems to sophisticated machine learning and deep learning models. Neural networks play a central role in this shift: loosely inspired by the structure of the brain, they learn to process data through layers of interconnected units.

- Brief History and Evolution of AI Technologies: AI began with basic algorithms and progressed towards machine learning and deep learning, including neural networks like CNNs and RNNs.

- The Importance of Neural Networks in Advancing AI: Neural networks enable machines to recognize patterns, make predictions, and perform tasks without explicit programming.

- Introduction to RNNs and CNNs: While CNNs excel at handling spatial data, such as images, RNNs excel at processing sequential data, like time series or language.

Purpose of Combining Cascade and Parallel Approaches

The combination of cascade and parallel architectures in neural networks aims to harness the strengths of both approaches.

- Why Combining Cascade and Parallel Architectures Is Important: Cascade architecture processes data step by step, while parallel architecture processes multiple data points simultaneously. Together, they can improve both computational efficiency and network performance.

- How These Techniques Improve Neural Network Performance: Using both architectures makes it possible to handle complex data more efficiently, shorten training and inference time, and improve accuracy.

Fundamentals of Convolutional Neural Networks (CNNs)

What Are CNNs?

CNNs are deep learning models designed for data with a grid-like topology, such as images. They apply learnable filters in convolutional layers, together with pooling layers, to extract features from raw input data automatically.

- Definition and Components: CNNs consist of multiple layers:
  - Convolutional Layers extract features like edges or textures.
  - Pooling Layers reduce the spatial dimensions.
  - Fully Connected Layers classify or predict based on the features.

- Basic Functionality of CNNs in Image Processing: CNNs are particularly useful in tasks like object detection and facial recognition due to their ability to extract hierarchical features.
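The three layer types listed above can be illustrated with a small pure-Python sketch (no deep learning library assumed). The function names are our own, and the filter and dense weights are hand-picked for illustration rather than learned; a real CNN learns these values during training and uses many filters per layer.

```python
# Pure-Python sketch of the three CNN layer types described above.
# Weights are hand-picked for illustration (an assumption); a real CNN learns them.

def conv2d(image, kernel):
    """Convolutional layer: valid (unpadded) 2D cross-correlation with one filter."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    """Element-wise ReLU non-linearity applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2):
    """Pooling layer: non-overlapping max pooling; incomplete edge windows are dropped."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def dense(features, weights, bias):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(f * w for f, w in zip(features, row)) + b
            for row, b in zip(weights, bias)]


# Toy 5x5 "image": dark left columns, bright right columns (a vertical edge).
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]  # responds to dark-to-bright transitions

fmap = relu(conv2d(image, edge_kernel))      # 4x4 feature map
pooled = max_pool2d(fmap)                    # 2x2 after pooling
flat = [v for row in pooled for v in row]    # flatten for the dense layer
score = dense(flat, [[0.25] * 4], [0.0])     # single output unit

print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
print(score)   # [1.0]
```

The edge filter fires only in the column where dark pixels meet bright ones, and pooling keeps that strongest response while halving each spatial dimension.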

Why CNNs Are Important for Sequential Data

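CNNs’ ability to capture local patterns is not limited to images. Applied along the time axis as a one-dimensional convolution, the same kind of filter detects short-range patterns in sequential data, such as a sudden jump in a time series or a characteristic word combination in text. By leveraging CNNs in Cascade and Parallel Convolutional Recurrent Neural Networks, we enhance feature extraction in sequential tasks, such as language modeling or time-series analysis.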
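As a minimal sketch of convolution along the time axis, the function below slides a short kernel over a one-dimensional series. The series values and both kernels are hypothetical; a trained network would learn its kernels from data.

```python
def conv1d(signal, kernel, stride=1):
    """Valid 1D cross-correlation: slide `kernel` along the time axis."""
    k = len(kernel)
    return [sum(signal[t + i] * kernel[i] for i in range(k))
            for t in range(0, len(signal) - k + 1, stride)]


# Hypothetical daily readings with a level shift between t=3 and t=4.
series = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]

# A difference filter fires on changes and stays at zero on flat stretches.
changes = conv1d(series, [-1.0, 1.0])
print(changes)   # [0.0, 0.0, 0.0, 4.0, 0.0, 0.0, 0.0]

# A two-tap average with stride 2 smooths and halves the sequence length.
smoothed = conv1d(series, [0.5, 0.5], stride=2)
print(smoothed)  # [1.0, 1.0, 5.0, 5.0]
```

Because the same weights are applied at every position, the difference filter would respond to the same jump wherever it occurred in the series.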

Fundamentals of Recurrent Neural Networks (RNNs)

What Are RNNs?

Unlike feedforward neural networks, RNNs have an internal feedback loop: the hidden state computed at one time step is fed back as input to the next. This lets them retain information over time, which makes them well suited to tasks that involve sequences, such as speech recognition or text prediction.

- The Concept of Feedback Loops and Memory: RNNs process data one step at a time, and the feedback loop carries information from previous steps forward in the hidden state.

- How RNNs Process Sequential Data: Each input updates the hidden state, so the output at any step can depend on everything that came before it. This makes RNNs a natural fit for tasks where context matters.
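The recurrence described above is commonly written as h_t = tanh(W_x x_t + W_h h_{t-1} + b). The sketch below implements that vanilla (Elman-style) update in plain Python with small, hand-picked weights (assumptions for illustration) and checks that the first input still affects the hidden state two steps later.

```python
import math


def rnn_forward(xs, W_x, W_h, b, h0):
    """Vanilla (Elman-style) RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b).

    xs is a list of input vectors; returns one hidden-state vector per step.
    """
    h = list(h0)
    states = []
    for x in xs:
        # The new state mixes the current input with the previous state (the feedback loop).
        h = [math.tanh(sum(W_x[i][j] * x[j] for j in range(len(x)))
                       + sum(W_h[i][k] * h[k] for k in range(len(h)))
                       + b[i])
             for i in range(len(h))]
        states.append(h)
    return states


# Hand-picked weights (assumptions): 1 input feature, 2 hidden units.
W_x = [[1.0], [0.5]]
W_h = [[0.5, 0.0], [0.0, 0.5]]
b = [0.0, 0.0]
h0 = [0.0, 0.0]

# Two sequences that differ only in their first element.
seq_a = [[1.0], [0.0], [0.0]]
seq_b = [[0.0], [0.0], [0.0]]

final_a = rnn_forward(seq_a, W_x, W_h, b, h0)[-1]
final_b = rnn_forward(seq_b, W_x, W_h, b, h0)[-1]
print(final_a, final_b)  # final_a is non-zero: the first input is still "remembered"
```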

Challenges in RNNs

While RNNs are powerful, they face some challenges:

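- Vanishing and Exploding Gradients: During training, backpropagation through time multiplies the gradient by a factor for every time step. Over long sequences these products can shrink toward zero (vanishing gradients) or grow uncontrollably (exploding gradients). Vanishing gradients in particular make it hard for RNNs to learn long-term dependencies.

- Solutions like LSTM and GRU: Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU) use learned gates that control what is kept, updated, or forgotten in the hidden state, which lets gradients flow across many steps and helps RNNs learn long sequences. Exploding gradients are commonly handled separately with gradient clipping.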
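To see why gating helps, the sketch below estimates how strongly the final hidden state depends on the initial one (d h_T / d h_0) after 50 steps, using a central finite difference. It compares a scalar vanilla RNN with recurrent weight 0.5 against a simplified scalar GRU-style cell whose update gate is biased to stay mostly closed. All weights are hypothetical, and the convention h_t = (1 - z) h_{t-1} + z h_tilde is one of two in common use (some libraries swap the roles of z and 1 - z).

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def rnn_step(h, x, w_h=0.5, w_x=1.0):
    """Scalar vanilla RNN step: h_t = tanh(w_h * h_{t-1} + w_x * x_t)."""
    return math.tanh(w_h * h + w_x * x)


def gru_step(h, x, b_z=-4.0):
    """Simplified scalar GRU-style step with hand-picked weights (an assumption).

    Convention here: h_t = (1 - z) * h_{t-1} + z * h_tilde. The negative
    update-gate bias keeps z small, so most of h_{t-1} is copied forward.
    """
    z = sigmoid(x + b_z)                  # update gate
    r = sigmoid(x)                        # reset gate
    h_tilde = math.tanh(x + r * h)        # candidate state
    return (1.0 - z) * h + z * h_tilde


def final_state(step, h0, xs):
    h = h0
    for x in xs:
        h = step(h, x)
    return h


def sensitivity(step, xs, h0=0.0, eps=1e-6):
    """Central finite-difference estimate of d h_T / d h_0."""
    return (final_state(step, h0 + eps, xs) - final_state(step, h0 - eps, xs)) / (2 * eps)


xs = [0.0] * 50                            # 50 time steps of zero input
rnn_sens = sensitivity(rnn_step, xs)       # shrinks roughly like 0.5 ** 50
gru_sens = sensitivity(gru_step, xs)       # the gated path keeps it much larger
print(f"vanilla RNN: {rnn_sens:.2e}  GRU-style: {gru_sens:.2f}")
```

With these weights the vanilla RNN's sensitivity is vanishingly small, while the gated cell's stays a sizable fraction of 1, because each step copies most of the previous state through the (1 - z) path instead of squashing it through another weight-and-tanh layer.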

Cascade and Parallel Architectures

What Is Cascade Architecture?

In cascade networks, data is passed sequentially through a series of stages, each of which refines the representation produced by the stage before it. This hierarchical, step-by-step approach lets later stages build on features that earlier stages have already extracted, which can improve accuracy and processing efficiency.

- How Cascade Networks Process Data: Data flows through multiple stages, and each stage transforms its input before passing the refined representation to the next stage.
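A cascade can be sketched as a list of stages applied in order, each consuming the previous stage's output. The three stages here (smoothing, normalization, and thresholding of a noisy signal) are hypothetical stand-ins for learned layers, chosen only to make each refinement step visible.

```python
from typing import Callable, List, Sequence

Stage = Callable[[List[float]], List[float]]


def cascade(stages: Sequence[Stage], x: List[float]) -> List[float]:
    """Apply each stage in order; every stage refines the previous stage's output."""
    for stage in stages:
        x = stage(x)
    return x


# Hypothetical stages standing in for learned layers.
def smooth(xs: List[float]) -> List[float]:
    """3-point moving average; edge points average over the neighbours available."""
    out = []
    for i in range(len(xs)):
        window = xs[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out


def normalize(xs: List[float]) -> List[float]:
    """Rescale to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(xs), max(xs)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in xs]


def threshold(xs: List[float], level: float = 0.5) -> List[float]:
    """Binary decision per time step."""
    return [1.0 if v >= level else 0.0 for v in xs]


signal = [0.0, 0.2, 0.1, 3.0, 3.2, 2.9, 0.1, 0.0]
mask = cascade([smooth, normalize, threshold], signal)
print(mask)  # [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
```

Each stage only needs to solve a simpler sub-problem because the stage before it has already cleaned up its input; in a learned cascade the stages are trained layers rather than fixed functions.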

What Is Parallel Architecture?

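Parallel processing handles independent computations at the same time, for example several data inputs processed simultaneously, which can significantly reduce computation time.

- How Parallel Architectures Enhance Efficiency and Scalability: Because independent computations do not have to wait for one another, parallel designs reduce wall-clock time on hardware that can execute them concurrently, and they scale to larger workloads by adding more compute. This is especially valuable in applications like real-time data processing.

- Examples of Parallel Approaches in Neural Networks: Modern GPUs process whole batches of inputs at once, and cloud computing distributes work across many machines, allowing massive datasets to be processed faster.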
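The structure of data parallelism can be sketched with Python's standard `concurrent.futures` module: independent inputs are mapped over a pool of workers, and `Executor.map` returns results in input order. `score_sequence` is a hypothetical stand-in for a model's forward pass. In CPython, threads give real speedups mainly when the work releases the global interpreter lock (for example I/O or native numerical libraries), and deep learning frameworks get their parallelism from batched GPU kernels, so this sketch shows the pattern rather than a fast implementation.

```python
from concurrent.futures import ThreadPoolExecutor


def score_sequence(seq):
    """Hypothetical stand-in for a model's forward pass on one sequence."""
    return sum((v * v for v in seq), 0.0)


batch = [[1.0, 2.0], [3.0], [0.5, 0.5, 0.5], []]

# The sequences are independent, so workers can process them concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(score_sequence, batch))

# Executor.map yields results in input order, matching a sequential loop.
print(results)  # [5.0, 9.0, 0.75, 0.0]
```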

Cascade and Parallel Convolutional Recurrent Neural Networks (CP-C-RNNs)

What Are Cascade and Parallel Convolutional RNNs?

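Cascade and Parallel Convolutional Recurrent Neural Networks combine CNNs for feature extraction with RNNs for sequential data processing. In the cascade arrangement, the CNN's output features become the RNN's input sequence; in the parallel arrangement, a CNN branch and an RNN branch process the same input side by side and their outputs are combined. Integrating both arrangements lets these models handle complex tasks efficiently.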

- Benefits of Combining CNNs with RNNs: CNNs excel in feature extraction, while RNNs provide the memory needed for sequential data. Together, they handle a variety of applications, such as time-series prediction and language processing.
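The sketch below contrasts the two arrangements on a toy signal, using plain Python, ReLU convolutions, and an RNN with a single scalar hidden state. The kernels, weights, stride, and the choice to fuse the parallel branches by concatenation are illustrative assumptions, not a specific published configuration. In the cascade version the RNN reads the CNN's feature sequence; in the parallel version both branches read the raw signal and their outputs are concatenated.

```python
import math


def conv1d(signal, kernel, stride=1):
    """Valid 1D cross-correlation along the time axis."""
    k = len(kernel)
    return [sum(signal[t + i] * kernel[i] for i in range(k))
            for t in range(0, len(signal) - k + 1, stride)]


def cnn_features(signal, kernels, stride=1):
    """CNN stage: one ReLU feature channel per kernel, returned as (time, channels)."""
    channels = [[max(0.0, v) for v in conv1d(signal, k, stride)] for k in kernels]
    return [list(step) for step in zip(*channels)]


def rnn_last_state(steps, w_x, w_h=0.5):
    """RNN stage: scalar hidden state, one input weight per feature."""
    h = 0.0
    for x in steps:
        h = math.tanh(sum(w * v for w, v in zip(w_x, x)) + w_h * h)
    return h


def cascade_crnn(signal, kernels, w_x):
    # Cascade: the RNN reads the CNN's (shorter) feature sequence.
    return rnn_last_state(cnn_features(signal, kernels, stride=2), w_x)


def parallel_crnn(signal, kernels, w_raw):
    # Parallel: both branches read the raw signal independently.
    feats = cnn_features(signal, kernels)
    cnn_out = [max(channel) for channel in zip(*feats)]      # global max pool per channel
    rnn_out = rnn_last_state([[v] for v in signal], [w_raw])
    return cnn_out + [rnn_out]                                # fuse by concatenation


signal = [0.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0]
kernels = [[-1.0, 1.0], [1.0, -1.0]]   # rising-edge and falling-edge detectors

print(cnn_features(signal, kernels, stride=2))  # [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
print(cascade_crnn(signal, kernels, w_x=[1.0, -1.0]))
print(parallel_crnn(signal, kernels, w_raw=1.0))
```

With stride 2, the cascade's RNN reads 4 feature steps instead of 8 raw samples, while the parallel model keeps the two views of the input separate until the final concatenation.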

How Cascade and Parallel Approaches Improve Sequential Data Processing

- Cascade Improves Long-Term Dependency Handling: In a cascade, convolution and pooling first condense the raw sequence into a shorter sequence of higher-level features, so the RNN has fewer steps across which it must carry information. This helps it capture long-term dependencies.

- Parallelization Speeds Up Computation: Processing batches and independent branches in parallel speeds up the training of complex models, making real-time applications more feasible.
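How much shorter the sequence reaching the RNN becomes follows from the standard output-length formula for a convolution or pooling layer with dilation 1, floor((n + 2p - k) / s) + 1. The layer sizes below (a 1,000-step input, a size-5 convolution with padding 2, and size-4 pooling with stride 4) are hypothetical.

```python
def conv_output_length(n, kernel_size, stride=1, padding=0):
    """Output length of a 1D convolution or pooling layer (dilation 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1


raw_steps = 1000                                                                # hypothetical input length
after_conv = conv_output_length(raw_steps, kernel_size=5, stride=1, padding=2)  # "same" padding keeps 1000
after_pool = conv_output_length(after_conv, kernel_size=4, stride=4)            # pooling shrinks it to 250
print(raw_steps, after_conv, after_pool)  # 1000 1000 250
```

Under these assumptions the RNN needs 250 recurrent steps, not 1,000, to connect the first and last parts of the input, so there are four times fewer steps for gradients to pass through.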

Applications of Cascade and Parallel Convolutional RNNs

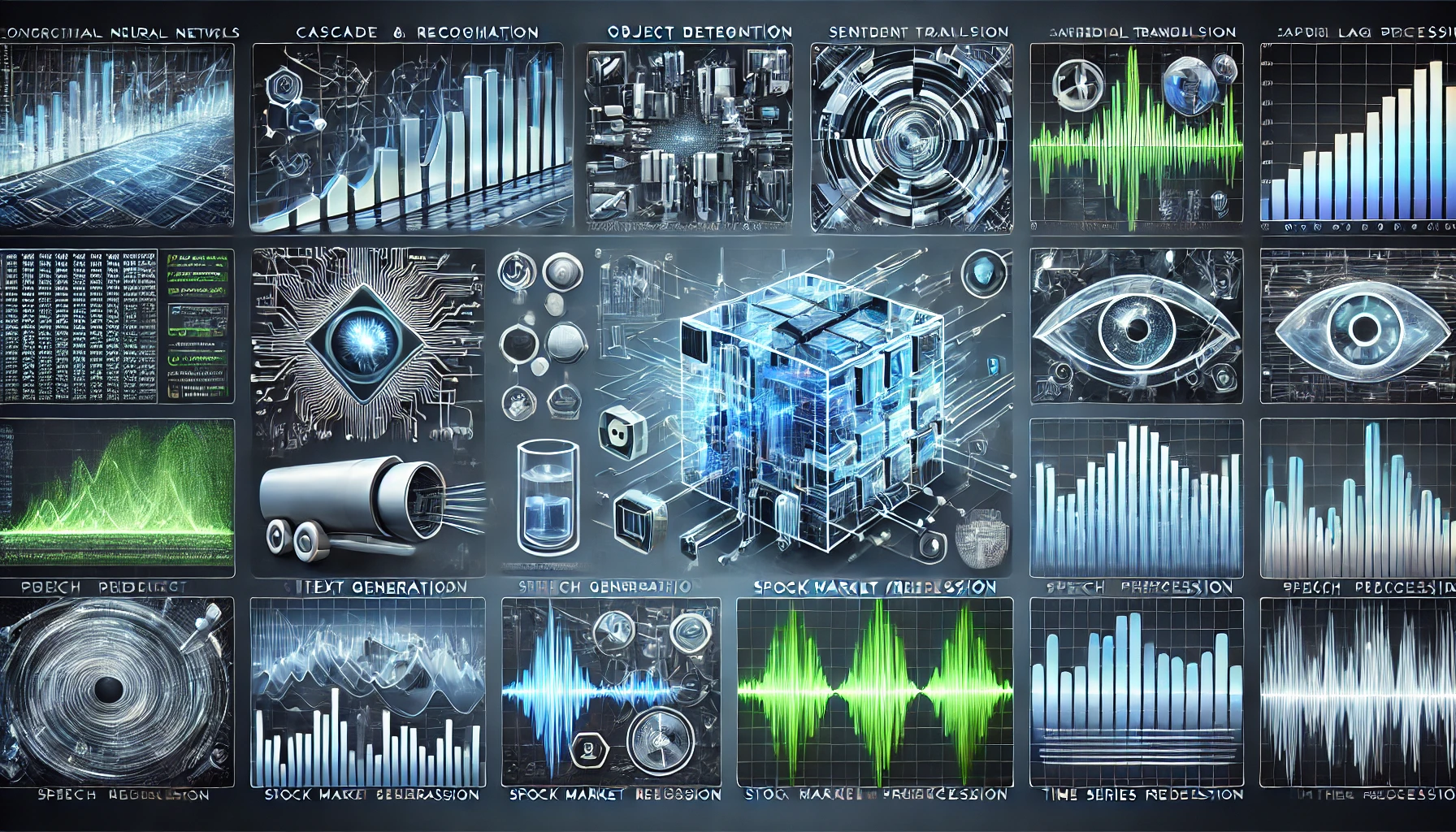

Image and Video Analysis

CP-C-RNNs are applied to object detection, recognition, and tracking, as well as video classification and event detection, where the CNN analyzes individual frames and the RNN models how the scene changes over time.

Natural Language Processing (NLP)

- Sentiment Analysis, Machine Translation, and Text Generation: Convolutions capture local word patterns while recurrence tracks longer-range context, which makes CP-C-RNNs well suited to these NLP tasks.

Speech Recognition and Audio Processing

CP-C-RNNs help convert speech to text by processing sequential audio features efficiently.

Time Series Forecasting

- Stock Market Prediction, Weather Forecasting: The combination of CNNs and RNNs allows CP-C-RNNs to predict future data trends based on historical patterns.
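Forecasting models of this kind are usually trained on sliding windows: each input is a fixed number of past values, and the target is a later value. The helper below is a minimal sketch of that preprocessing step; the temperature readings, the window length of 3, and the `horizon` parameter are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], window: int,
                 horizon: int = 1) -> List[Tuple[List[float], float]]:
    """Turn a series into (history, target) pairs for supervised forecasting.

    Each input is `window` consecutive past values; the target is the value
    `horizon` steps after the end of that window.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        history = list(series[start:start + window])
        target = series[start + window + horizon - 1]
        pairs.append((history, target))
    return pairs


temps = [12.0, 13.5, 15.0, 14.0, 16.5, 17.0]   # hypothetical daily readings
for history, target in make_windows(temps, window=3):
    print(history, "->", target)
# [12.0, 13.5, 15.0] -> 14.0
# [13.5, 15.0, 14.0] -> 16.5
# [15.0, 14.0, 16.5] -> 17.0
```

Each history window would then be fed to the CNN and RNN stages as one input sequence, with the target as the value to predict.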

Challenges and Solutions in Cascade and Parallel CNN-RNN Networks

Computational Complexity and Training Time

Training CP-C-RNNs can be computationally expensive due to the complexity of both cascade and parallel approaches. Optimization techniques and faster hardware can help mitigate these issues.

Overfitting and Model Generalization

The complexity of CP-C-RNNs can lead to overfitting. Regularization techniques such as dropout help improve generalization, and cross-validation helps detect overfitting and choose hyperparameters that generalize well.
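Both techniques are simple to sketch. Inverted dropout zeroes each activation with probability p during training and rescales the survivors by 1/(1 - p), so no rescaling is needed at inference; k-fold cross-validation rotates which slice of the data is held out for validation. The helpers below are hypothetical, and the folds are contiguous, so shuffle the data first if its order carries no meaning (for time series, splits that keep validation data after the training data are usually more appropriate).

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout.

    During training each unit is zeroed with probability p and survivors are
    scaled by 1 / (1 - p), keeping the expected activation unchanged.
    At inference (training=False) the input passes through untouched.
    `rng` defaults to the module-level generator; pass random.Random(seed)
    for reproducible masks.
    """
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def k_fold_indices(n, k):
    """Yield (train_indices, val_indices) for k contiguous folds over n samples."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


rng = random.Random(0)
print(dropout([1.0] * 8, p=0.5, rng=rng))          # each entry is 0.0 or 2.0
print(dropout([1.0] * 8, p=0.5, training=False))   # unchanged at inference
for train_idx, val_idx in k_fold_indices(10, 3):
    print(len(train_idx), val_idx)
```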

Data Scarcity and Labeling Challenges

Training CP-C-RNNs typically requires large labeled datasets. Transfer learning and semi-supervised approaches can reduce the need for extensive labeled data.
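Pseudo-labeling is one common semi-supervised approach: a model trained on the small labeled set predicts labels for unlabeled samples, and only confident predictions are added back as training data. The sketch below shows the selection step; `toy_model` is a hypothetical stand-in for a trained classifier, and the 0.9 threshold is an assumption that would normally be tuned.

```python
def pseudo_label(predict_proba, unlabeled, threshold=0.9):
    """Keep unlabeled samples whose top class probability is at least `threshold`,
    paired with that class as a pseudo-label."""
    selected = []
    for x in unlabeled:
        probs = predict_proba(x)
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold:
            selected.append((x, best))
    return selected


def toy_model(x):
    """Hypothetical classifier: the probability of class 1 grows with x."""
    p1 = min(max(x, 0.0), 1.0)
    return [1.0 - p1, p1]


unlabeled = [0.02, 0.45, 0.97, 0.6]
print(pseudo_label(toy_model, unlabeled))  # [(0.02, 0), (0.97, 1)]
```

The selected pairs would be merged with the labeled set and the model retrained; ambiguous samples such as 0.45 and 0.6 are left out so that uncertain predictions do not reinforce the model's own mistakes.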

Future of Cascade and Parallel Convolutional RNNs

Emerging Trends in CP-C-RNNs

Hybrid models integrating CNNs, RNNs, and other neural networks are expected to become more prevalent, improving performance in real-time AI applications.

Innovations in Hardware and Software

Improvements in GPU acceleration, edge computing, and distributed processing will continue to expand the capabilities of CP-C-RNNs and make them more scalable.

Conclusion

Key Takeaways

- Cascade and Parallel Convolutional RNNs are revolutionizing the way we process sequential data.

- These networks combine the spatial feature extraction power of CNNs with the sequential processing ability of RNNs to improve performance and efficiency.

The Potential of CP-C-RNNs in Transforming AI

With their ability to handle complex data efficiently, CP-C-RNNs are paving the way for innovations in autonomous systems, healthcare, and robotics.

FAQs

What is the advantage of using cascade and parallel architectures in neural networks?

- Cascade and parallel architectures allow for improved accuracy and faster processing by handling data at multiple levels and enhancing parallel computation.

How do Cascade Convolutional Recurrent Neural Networks improve RNN performance?

- Cascade structures help RNNs handle long-term dependencies by passing information through layers in a sequential manner, while CNNs extract features more efficiently.

Can CP-C-RNNs be used for real-time applications?

- Yes, with the use of parallel processing, CP-C-RNNs can significantly speed up computations, making them suitable for real-time applications such as video processing and speech recognition.

What are the main challenges in training CP-C-RNNs?

- The challenges include increased computational complexity, overfitting, and data scarcity, all of which can be mitigated using optimization techniques and transfer learning.

How are Cascade and Parallel Convolutional RNNs used in healthcare?

- CP-C-RNNs can analyze sequential medical data like patient monitoring data to predict outcomes, track health progress, and aid in early diagnosis.
